High available PostgreSQL on kubernetes

This post covers installing PostgreSQL in a highly available manner on kubernetes. While this was quite an adventure in the past, CloudNativePG - an awesome k8s operator for Postgres makes this rather easy and straight-forwards.

The TLDR is found in the Summary

- CloudNativePG - the best PostgreSQL kubernetes operator so far

- Install High available Postgres In Kubernetes

- How many databases per cluster?

- Whats next?

- Summary

PostgreSQL is my favorite database system. Reasons for that are:

- Huge and professional community

- Stable - it's one of this install and forget technologies - it is almost impossible to break it

- Rich ecosystem. PostgreSQL provides extensions for just anything. Geospatial data, Timeseries, Column stores, backups, monitoring, etc.

- Foreign Data Wrappers. They allow to "connect" to other data stores and postgres can act as interface to other data

- Rich SQL language support

- Scalability. If done right, PostgreSQL can scale incredibly well also for very large databases

So if anybody asks, what database to use, PostgreSQL is almost always one of the right answers.

Nevertheless, one has to admit, that operating PostgreSQL is clearly targeted to bare-metal installations. With the rise of kubernetes, PostgreSQL was always a little bit a misfit. A lot of folks just ran kubernetes alongside a bare-metal PostgreSQL instance. Alternatively, some very smart people created incredible systems for high available, auto-failover PostgreSQL on kubernetes. One of the best of these tools was zalando`s patroni or spilo.

Up until recently I successfully used spilo to run high available PostgreSQL instances which survived several catastrophic infrastructure events. However - even I as hardcore PostgreSQL fan have to admin, that operating spilo was kind of a pain in the ass. There are a lot of moving parts and manual intervention (eg. for updates) was hard.

CloudNativePG - the best PostgreSQL kubernetes operator so far

That's where CloudNativePG. CloudNativePG was developed and sponsored by the awesome people at EDB. CloudNativePG attempts to tackle this operational struggles of current postgres - k8s solutions. It provides a kubernetes operator that can bootstrap a high available PostgreSQL database cluster, using only kubernetes APIs and native streaming replication.

The clusters can run in private and public clouds and even hybrid-cloud environments.

As from their github repository

1The goal of CloudNativePG is to increase the adoption of PostgreSQL, one of the most loved DBMS in traditional VM and bare metal environments, inside Kubernetes, thus making the database an integral part of the development process and CI/CD automated pipelines.

Again, citing what they write on their github repository:

The operator is responsible for managing the status of the Cluster resource, keeping it up to date with the information that each PostgreSQL instance manager regularly reports back through the API server. Such changes might trigger, for example, actions like:

-

a PostgreSQL failover where, after an unexpected failure of a cluster's primary instance, the operator itself elects the new primary, updates the status, and directly coordinates the operation through the reconciliation loop, by relying on the instance managers

-

scaling up or down the number of read-only replicas, based on a positive or negative variation in the number of desired instances in the cluster, so that the operator creates or removes the required resources to run PostgreSQL, such as persistent volumes, persistent volume claims, pods, secrets, config maps, and then coordinates cloning and streaming replication tasks

-

updates of the endpoints of the PostgreSQL services that applications rely on to interact with the database, as Kubernetes represents the single source of truth and authority

-

updates of container images in a rolling fashion, following a change in the image name, by first updating the pods where replicas are running, and then the primary, issuing a switchover first

Furthermore, CloudNativePG provides resources for automatic backups and connection pooling.

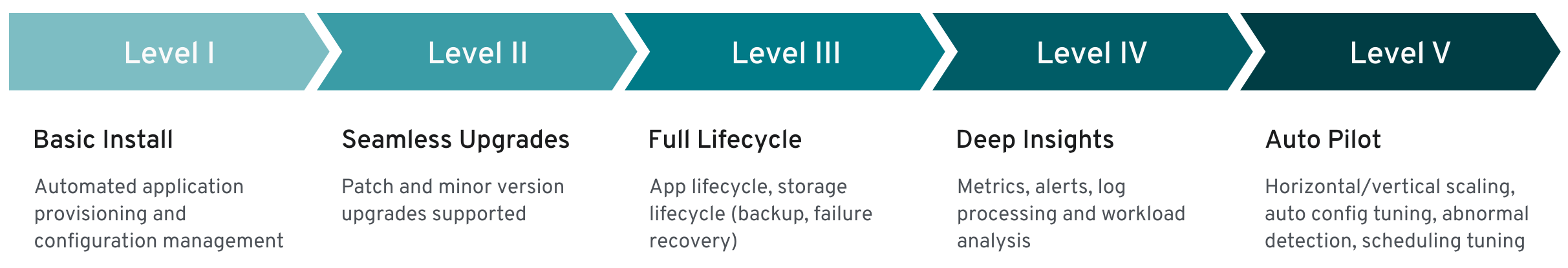

In summary, the CloudNativePG operator provides the following levels of operations

k3s high available multi-server deployment with embedded storage

k3s high available multi-server deployment with embedded storage

Install High available Postgres In Kubernetes

Now that we know what CloudNativePG is, let's start with a fresh install.

Prerequisites

For this guide to work, you'll need a running kubernetes cluster with at least 3 worker nodes and kubectl installed.

Finally, make sure that krewthe kubectl extension manager is installed, as described in the crew docs

1. Install the operator

-

For convenience, the folks at EDB provided us with a kubectl plugin called

cnpg. Install it with:1kubectl krew install cnpg -

Generate the operator yaml manifest. The -n flag defines the namespace where the operator is deployed to and the replicas flag tells us how many replicas of the operator should be installed (note: number of operator replicas - not postgres instances)

1kubectl cnpg install generate -n devops-system --replicas 3 > operator-manifests.yamlThis will create a file called

operator-manifests.yaml. -

(Optional) The operator can be deployed using these manifests. However, the operator comes without any kubernetes tolerations. If you have separate control-plane and worker kubernetes nodes, you might want to add some tolerations to allow scheduling of the operator to the control-plane nodes. This is - however - highly optional. If you want to add some tolerations, open the file and add the tolerations to the operator deployment. Look for the following deployment (faaaar down in the file):

1apiVersion: apps/v12kind: Deployment3metadata:4creationTimestamp: null5labels:6 app.kubernetes.io/name: cloudnative-pg7name: cnpg-controller-manager8namespace: devops-systemAt the very end of the specification of the deployment, add the tolerations (see highlighted lines below):

1 - name: cnpg-pull-secret2 securityContext:3 runAsNonRoot: true4 seccompProfile:5 type: RuntimeDefault6 serviceAccountName: cnpg-manager7 terminationGracePeriodSeconds: 108 tolerations:9 - effect: NoSchedule10 key: node-role.kubernetes.io/master11 operator: Exists12 - effect: NoSchedule13 key: node-role.kubernetes.io/control-plane14 operator: Exists15 volumes:16 - emptyDir: {}17 name: scratch-data18 - name: webhook-certificatesThis example tolerations will allow the operator to be scheduled on the control-plane nodes - if they have the well-known taint

node-role.kubernetes.io/control-plane:NoScheduleornode-role.kubernetes.io/master:NoSchedule. -

Deploy the manifests:

1kubectl apply -f operator-manifests.yaml

Congratulations: You just deployed the CloudNativePG kubernetes operator.

To check if everything is alright, run kubectl get pods -n devops-system. The output should look similar to

1NAME READY STATUS RESTARTS AGE2pod/cnpg-controller-manager-6448848cc9-25dwj 1/1 Running 0 3m16s3pod/cnpg-controller-manager-6448848cc9-9l4wt 1/1 Running 0 3m16s4pod/cnpg-controller-manager-6448848cc9-pzcjd 1/1 Running 0 3m16s

2. Create a PostgreSQL cluster

Now that our operator is installed, let's create a high available PostgreSQL cluster. We will also bootstrap an application database, add some database users and add some configuration options. All of that can be done in a single yaml-manifest - making setting up a cluster a delight.

The following section shows the manifest - I'll add comments where appropriate for more details.

For defining the secrets, keep in mind that kubernetes expects the secrets to be base64 encoded. On Linux, simply pass your secrets through base64 as follows:

1echo myrealpassword | base64

For the section about postgres settings, I used the toolpgtune to find settings appropriate for my nodes. Please navigate there and use the settings which best fit for your nodes.

1apiVersion: v12type: kubernetes.io/basic-auth3kind: Secret4data:5 password: c2VjcmV0X3Bhc3N3b3Jk #secret_password6 username: YXBwX3VzZXI= #app_user7metadata:8 name: example-app-user9 namespace: postgres-namespace10---11apiVersion: v112kind: Secret13type: kubernetes.io/basic-auth14data:15 password: c2VjcmV0X3Bhc3N3b3Jk #secret_password16 username: cG9zdGdyZXM= #postgres - Note: It NEEDs to be postgres17metadata:18 name: example-superuser19 namespace: postgres-namespace20---21apiVersion: postgresql.cnpg.io/v122kind: Cluster23metadata:24 name: example-cluster25 namespace: postgres-namespace26spec:27 description: "DevOps and more cluster"28 imageName: ghcr.io/cloudnative-pg/postgresql:15.12930 # Number of instances. The operator automatically starts one master31 # and two replicas.32 instances: 33334 # How many seconds to wait before the liveness probe starts35 # Should be higher than the time needed for postgres to start36 startDelay: 3037 # When shutting down, for how long (in seconds) the operator should38 # wait before executing a fast shutdown (terminating existing connections)39 # The operator actually waits for half the time before executing fast shutdown.40 # The other half is used to wait for finishing WAL archiving.41 stopDelay: 1004243 # Example of rolling update strategy:44 # - unsupervised: automated update of the primary once all45 # replicas have been upgraded (default)46 # - supervised: requires manual supervision to perform47 # the switchover of the primary48 primaryUpdateStrategy: unsupervised4950 # These are the settings of postgres. Use pgtune for determining them51 postgresql:52 parameters:53 max_connections: 10054 shared_buffers: 2560MB55 effective_cache_size: 7680MB56 maintenance_work_mem: 640MB57 checkpoint_completion_target: 0.958 wal_buffers: 16MB59 default_statistics_target: 10060 random_page_cost: 1.161 effective_io_concurrency: 20062 work_mem: 13107kB63 min_wal_size: 1GB64 max_wal_size: 4GB65 max_worker_processes: 466 max_parallel_workers_per_gather: 267 max_parallel_workers: 468 max_parallel_maintenance_workers: 26970 # These settings below automatically enable71 # the pg_stat_statements and auto_explain extensions.72 # No need, to add them to the shared_preload_libraries73 pg_stat_statements.max: '10000'74 pg_stat_statements.track: all75 auto_explain.log_min_duration: '5s'7677 # pg_hba.conf file configuration. This line ensures, that, for78 # all databases, all users can connect on the specified IP-Adress79 # The scram-sha-256 method should be used for password verification80 # https://www.postgresql.org/docs/current/auth-pg-hba-conf.html81 pg_hba:82 - host all all 10.244.0.0/16 scram-sha-2568384 bootstrap:85 initdb:86 database: app87 # name of the user who owns the database.88 # There needs to be a secret for this user.89 owner: app_user90 secret:91 name: example-app-user92 # Alternative bootstrap method: start from a backup93 #recovery:94 # backup:95 # name: backup-example9697 superuserSecret:98 name: example-superuser99100 # Template for the PVC. Keep in mind that for storage101 # which is bound directly to a node, the pod can consume as much102 # storage until the disk space is full. The setting here is not a limit.103 storage:104 pvcTemplate:105 accessModes:106 - ReadWriteOnce107 resources:108 requests:109 storage: 10Gi110 volumeMode: Filesystem111112 resources:113 requests:114 memory: "1Gi"115 cpu: "1"116 limits:117 memory: "10Gi"118119# # Optional setting: Only deploy on worker nodes120# nodeSelector:121# kubernetes.io/role: "worker"122123 # Optional setting: For nodes with locally attached storage,124 # When using local storage for PostgreSQL, you are advised to temporarily put the cluster in maintenance mode through the nodeMaintenanceWindow option to avoid standard self-healing procedures to kick in, while, for example, enlarging the partition on the physical node or updating the node itself.125 nodeMaintenanceWindow:126 inProgress: false127 # States if an existing PVC is reused or not during the maintenance operation.128 # When enabled, Kubernetes waits for the node to come up again and then reuses the existing PVC. Suggested to keep on.129 reusePVC: true

Apply the manifest by running kubectl apply -f <your-manifest-filename>.yaml

Check if everything works fine by running kubectl -n postgres-namespace get pods. The output should be similar to

1NAME READY STATUS RESTARTS AGE2example-cluster-1 1/1 Running 0 25m3example-cluster-2 1/1 Running 0 24m4example-cluster-3 1/1 Running 0 19m

AWESOME: We now have a running postgresql cluster with 3 needs, high availability and auto-failover!

You might check on the cluster health, by running:

1kubectl cnpg status -n postgres-namespace example-cluster -v

This will output some nicely formatted information about the general PostgreSQL cluster state.

Additionally, the cnpg kubectl command provides some handy commands:

1 certificate Create a client certificate to connect to PostgreSQL using TLS and Certificate authentication2 completion Generate the autocompletion script for the specified shell3 destroy Destroy the instance named [CLUSTER_NAME] and [INSTANCE_ID] with the associated PVC4 fencing Fencing related commands5 hibernate Hibernation related commands6 install CNPG installation commands7 maintenance Sets or removes maintenance mode from clusters8 pgbench Creates a pgbench job9 promote Promote the pod named [cluster]-[node] or [node] to primary10 reload Reload the cluster11 report Report on the operator12 restart Restart a cluster or a single instance in a cluster13 status Get the status of a PostgreSQL cluster

How many databases per cluster?

In traditional PostgreSQL hosting, multiple databases are part of one database instance or cluster. However, my (and EDBs) suggestion for Kubernetes workloads in general is, to have a separate cluster per database - entirely utilized by a single microservice application.

As per definition of microservices, they should own and manage their data - in their specific database. O the microservice can access the database, including schema management and migrations.

(The following paragraph is directly from CloudNativePG) CloudNativePG has been designed to work this way out of the box, by default creating an application user and an application database owned by the aforementioned application user.

Reserving a PostgreSQL instance to a single microservice owned database, enhances:

- resource management: in PostgreSQL, CPU, and memory constrained resources are generally handled at the instance level, not the database level, making it easier to integrate it with Kubernetes resource management policies at the pod level

- physical continuous backup and Point-In-Time-Recovery (PITR): given that PostgreSQL handles continuous backup and recovery at the instance level, having one database per instance simplifies PITR operations, differentiates retention policy management, and increases data protection of backups

- application updates: enable each application to decide their update policies without impacting other databases owned by different applications

- database updates: each application can decide which PostgreSQL version to use, and independently, when to upgrade to a different major version of PostgreSQL and at what conditions (e.g., cutover time)

While I'd encourage you to keep to this pattern, it's very much possible to use the postgres superuser and create multiple schemas and databases per cluster.

Whats next?

Now that you have a highly available PostgreSQL cluster running, the heavy lifting is already done. Nevertheless, there are some imporant steps to continue.

Summary

This guide introduced CloudNativePG - a state-of-the-art kubernetes operator to manage a highly available PostgreSQL cluster, with auto-failover, backups etc. - all one needs for modern PostgreSQL operations.

Setting up a PostgreSQL cluster is done as follows:

-

Install the cnpg plugin:

kubectl krew install cnpg -

Generate the operator manifests:

kubectl cnpg install generate -n devops-system --replicas 3 > operator-manifests.yaml -

Optional: If you want, adjust the operator manifests for node tolerations or node affinity

-

Apply the operator:

kubectl apply -f operator-manifests.yaml -

Create your cluster manifest file:

1apiVersion: v12type: kubernetes.io/basic-auth3kind: Secret4data:5 password: c2VjcmV0X3Bhc3N3b3Jk #secret_password6 username: YXBwX3VzZXI= #app_user7metadata:8 name: example-app-user9 namespace: postgres-namespace10---11apiVersion: v112kind: Secret13type: kubernetes.io/basic-auth14data:15 password: c2VjcmV0X3Bhc3N3b3Jk #secret_password16 username: cG9zdGdyZXM= #postgres - Note: It NEEDs to be postgres17metadata:18 name: example-superuser19 namespace: postgres-namespace20---21apiVersion: postgresql.cnpg.io/v122kind: Cluster23metadata:24 name: example-cluster25 namespace: postgres-namespace26spec:27 description: "DevOps and more cluster"28 imageName: ghcr.io/cloudnative-pg/postgresql:15.129 instances: 330 startDelay: 3031 stopDelay: 10032 primaryUpdateStrategy: unsupervised3334 postgresql:35 parameters:36 max_connections: 10037 shared_buffers: 2560MB38 effective_cache_size: 7680MB39 maintenance_work_mem: 640MB40 checkpoint_completion_target: 0.941 wal_buffers: 16MB42 default_statistics_target: 10043 random_page_cost: 1.144 effective_io_concurrency: 20045 work_mem: 13107kB46 min_wal_size: 1GB47 max_wal_size: 4GB48 max_worker_processes: 449 max_parallel_workers_per_gather: 250 max_parallel_workers: 451 max_parallel_maintenance_workers: 252 pg_stat_statements.max: '10000'53 pg_stat_statements.track: all54 auto_explain.log_min_duration: '5s'55 pg_hba:56 - host all all 10.244.0.0/16 scram-sha-2565758 bootstrap:59 initdb:60 database: app61 owner: app_user62 secret:63 name: example-app-user64 # Alternative bootstrap method: start from a backup65 #recovery:66 # backup:67 # name: backup-example6869 superuserSecret:70 name: example-superuser71 storage:72 pvcTemplate:73 accessModes:74 - ReadWriteOnce75 resources:76 requests:77 storage: 10Gi78 volumeMode: Filesystem7980 resources:81 requests:82 memory: "1Gi"83 cpu: "1"84 limits:85 memory: "10Gi"8687 # # Optional setting: Only deploy on worker nodes88 # nodeSelector:89 # kubernetes.io/role: "worker"9091 nodeMaintenanceWindow:92 inProgress: false93 reusePVC: true -

Apply the manifest with

kubectl apply -f <manifest-filename>.yaml -

Check your cluster status with

kubectl cnpg status -n postgres-namespace example-cluster -v

------------------

Interested in how to train your very own Large Language Model?

We prepared a well-researched guide for how to use the latest advancements in Open Source technology to fine-tune your own LLM. This has many advantages like:

- Cost control

- Data privacy

- Excellent performance - adjusted specifically for your intended use