How to sync data from Senseforce (Paze) to local CSV with Airbyte

In this blog post we are going to explore what Airbyte is, what Senseforce is and how we can use Airbyte to sync any dataset from Senseforce to a local CSV file.

- What is Airbyte

- What is Senseforce

- Prerequisites

- Prepare a Dataset in Senseforce

- Configuring the Airbyte Connector

- Starting the Sync

- Advantages of Airbyte for Senseforce data syncs

- Summary

What is Airbyte

In simple terms, Airbyte is a system made for integrating data. More specifically, it is tailored to ELT (Extract, Load, Transform) use cases: it is extremely good at extracting data from one system and loading it into another database or system. Why are they so good?

- They have a ton of free and ready-to-use connectors, so there is a good chance that the system you want to integrate is already covered by Airbyte.

- They have by far THE best connector-building SDK. If the system of your choice is not available as a connector, it's really easy to create a new one (which is also the reason they have so many of them).

- Awesome, helpful and active community. You know who built a lot of the Airbyte connectors? Well, the community. Airbyte is very much focused on its community and invests heavily in building and maintaining it. They even pay active community members.

And what are they offering?

- As mentioned, a lot of connectors out of the box and a great connector-building SDK

- Scheduling to automatically trigger syncs

- Logging and Monitoring of connectors

- Incremental updates (no need to sync ALL data with every sync. Airbyte will maintain a state)

- Pagination

- Authentication

- Stream Slicing (If you have a lot of data to sync, Airbyte automatically divides the API requests into chunks of data - not overloading the source APIs)
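To make the pagination point more concrete, here is a minimal Python sketch of offset-based pagination, the kind of loop a connector runs so that you don't have to. The `fetch_page(offset, limit)` function is hypothetical and stands in for a real API call; the offset/limit scheme is an assumption for illustration, not necessarily what Airbyte or any particular source API uses.

```python
from typing import Any, Callable, Dict, Iterator, List

Record = Dict[str, Any]
# Hypothetical API call: returns at most `limit` records starting at `offset`.
FetchPage = Callable[[int, int], List[Record]]


def paginate(fetch_page: FetchPage, page_size: int = 100) -> Iterator[Record]:
    """Yield all records, requesting at most `page_size` records per call."""
    offset = 0
    while True:
        page = fetch_page(offset, page_size)
        yield from page
        if len(page) < page_size:
            # A short (or empty) page means there is nothing left to fetch.
            break
        offset += page_size


if __name__ == "__main__":
    # Fake source with 250 records, served in pages of 100, 100 and 50.
    data = [{"id": i} for i in range(250)]
    records = list(paginate(lambda offset, limit: data[offset:offset + limit]))
    print(len(records))  # 250
```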

All in all, Airbyte is one of the best, if not the best solution to integrate modern systems into a modern data stack.

How Airbyte markets themselves (source: https://airbyte.com)


What is Senseforce

NOTE: At the time of this writing, Senseforce has been rebranded to Paze.Industries. As many people still know them by their previous name, I'll call them Senseforce, but I'll update this post once the new brand is established. Congrats on the rebranding, by the way.

Senseforce/Paze.industries is, at its core, a low-code IoT solution targeted at the machine industry. Senseforce excels at out-of-the-box feature completeness: they provide a wide array of features needed for large-scale IoT installations. They make it relatively easy to connect a machine, gather data and build feature-rich data applications in their cloud platform, and they cover the full lifecycle of a machine's data.

Among others, they offer the following key features:

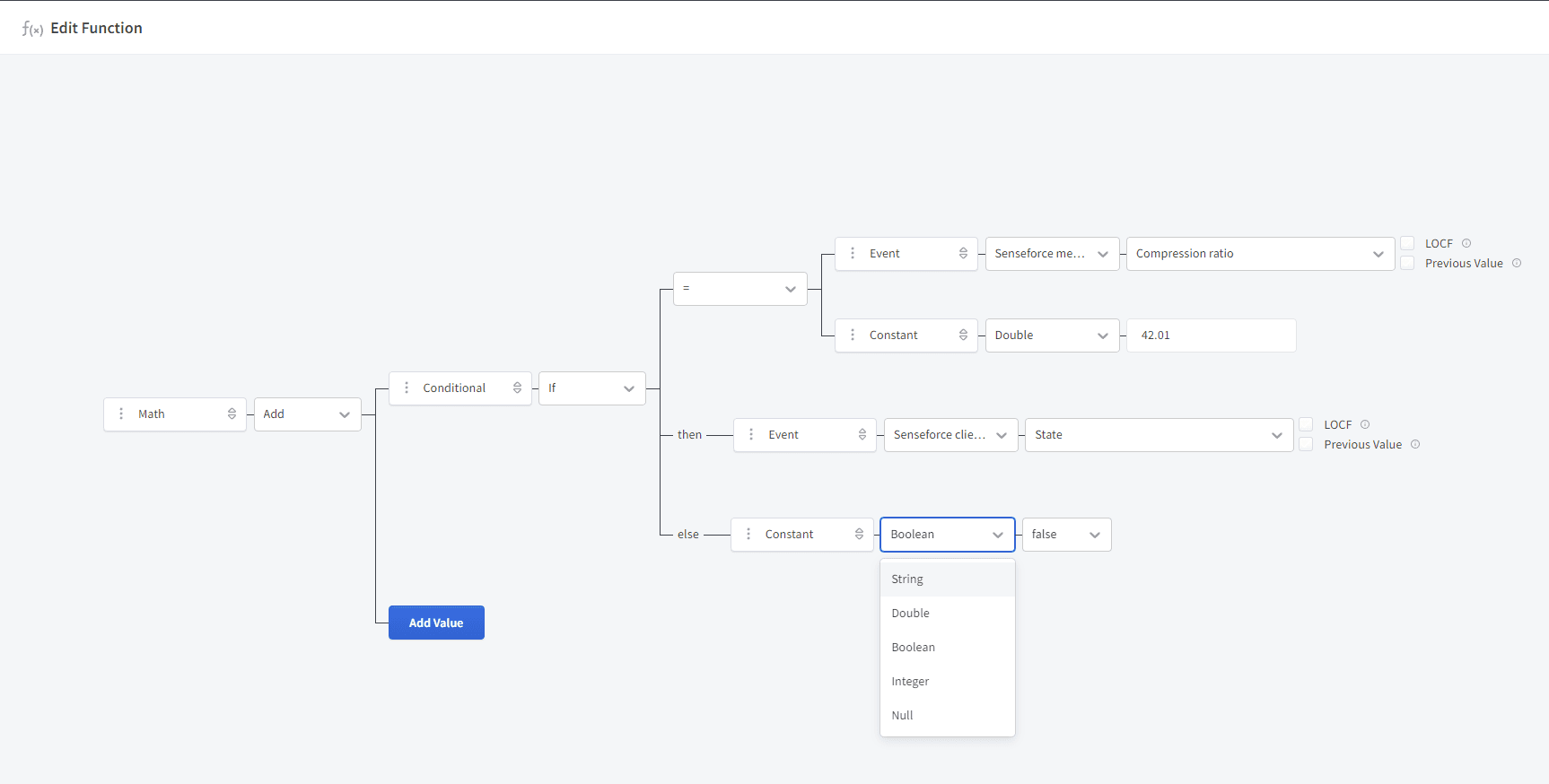

- Low-Code query builder: one of the most feature-rich low-code SQL query builders out there

- Script integrations: Besides low-code queries, one can also extend their data analytics with scripts

- Dashboards

- Edge Device Remote Management: They provide secure remote accessibility to any connected remote device

- Virtual Events: a form of data transformation

- Scheduling and Triggers: Create Events and Actions based on your data

- User/Groups: Senseforce was made to accommodate tenants and clients of tenants, meaning they provide very detailed user and group management capabilities

And thankfully they also offer a REST API, which we are going to use to sync their data.

Senseforce low-code query builder


Prerequisites

To follow this guide, you need:

- Git and Docker (including Docker Compose) installed

- a Senseforce user account which can create datasets

Prepare a Dataset in Senseforce

Like all Airbyte connectors, the Senseforce connector comes with documentation that is a great starting point; see the Airbyte docs for an introduction. Nevertheless, let's start from scratch here.

To use Airbyte to sync some data from Senseforce, we first need to define what data we want to extract.

- Create a new Dataset.

Create new Dataset

- Add the columns you want to sync by clicking on the data attributes in the "Add Data" section.

Senseforce Add Data Dialog

Important: You definitely need to add the "Timestamp", "Thing" and "Id" columns from the "Metadata" section. Airbyte needs them to provide the Stream Slicing and Incremental Sync features.

In our example we are interested in the "Uncompressed size" and "Inserted Events" metrics (see the screenshot above for reference). Feel free to add any columns you like, as long as you keep Thing, Timestamp and Id in the dataset.

- Give the Dataset a nice name and save it.

Senseforce save the dataset

- Navigate to your user profile and create an API token.

Senseforce Create an API Token

Make sure to note down this token, as you will need it later in the Airbyte configuration.

NOTE: That's it. Your Senseforce installation is ready to export the defined dataset.

Configuring the Airbyte Connector

Conveniently for us, Airbyte already provides a Senseforce source connector, so we can simply use the Airbyte user interface to configure our data extraction.

- Clone the Airbyte GitHub repository to your local machine by running:

```bash
git clone https://github.com/airbytehq/airbyte.git
```

This downloads the Airbyte source code and gives us a convenient way to spin up an on-demand Airbyte instance on our local machine.

NOTE: Alternatively, you can also host Airbyte on a remote server, but that's a story for another time.

- Run the following commands to start Airbyte on your local machine:

```bash
cd airbyte
docker compose up
```

Wait until the following output is shown in your terminal:

```text
     ___    _      __          __
    /   |  (_)____/ /_  __  __/ /____
   / /| | / / ___/ __ \/ / / / __/ _ \
  / ___ |/ / /  / /_/ / /_/ / /_/  __/
 /_/  |_/_/_/  /_.___/\__, /\__/\___/
                     /____/
 --------------------------------------
 Now ready at http://localhost:8000/
 --------------------------------------
 Version: 0.40.23
```

- With your browser, navigate to http://localhost:8000. The default username is "airbyte" and the password is "password". Complete the sign-up steps and you will arrive at a page similar to the one below.

Airbyte Start-Page

Click on "Create your first Connection" and select "Senseforce" from the Airbyte Dropdown menu

Airbyte source selection

Airbyte source selection -

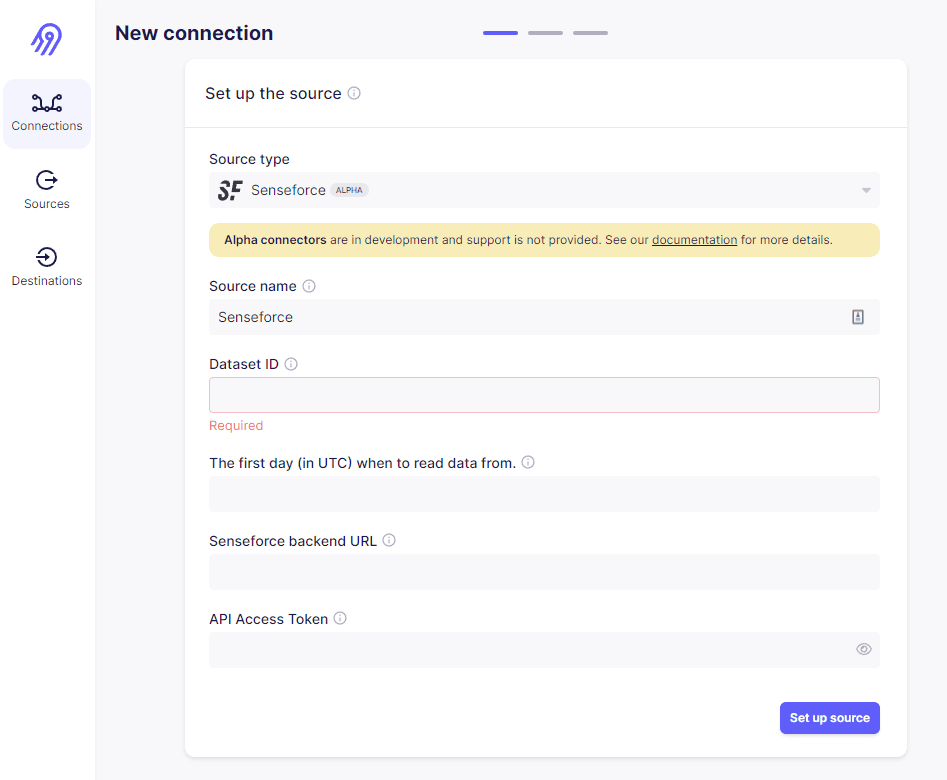

You will arrive at the following screen:

Airbyte connect to config screen

Airbyte connect to config screenAdd the information as follows:

- Source name: Display name of this source in your Airbyte instance. Can be any name.

- Dataset ID: ID of your Senseforce dataset. This is the last part of your dataset URL in Senseforce.

Senseforce Dataset Id

- The first day (in UTC) when to read data from: the earliest day of your dataset that Airbyte should sync.

- Senseforce backend URL: The URL of your Senseforce backend. The easiest way to find it is to log out of your Senseforce profile: the backend URL is simply the domain of the login screen.

Senseforce backend url

- API Access Token: Enter the access token you created earlier in Senseforce.

Click "Set up source" afterwards.

- The next screen will ask you to select a Destination:

Airbyte Destination selection screen

There you can select where to send your data. In our example, we select "Local CSV" to store the exports in a local CSV file. In the next screen, enter:

- Destination name: Name of this Destination in your Airbyte instance. This can be any name.

- destination_path: Airbyte writes all files to a local folder mount, which by default can be found in the folder /tmp/airbyte_local on your host. The destination_path setting therefore needs to start with /local (which maps to /tmp/airbyte_local). A possible example is /local/export. Note: This setting defines the directory where your files are placed, not the filename itself.

- Click "Set up Destination".

- The next screen is the "Connection" screen, which lets you configure how to sync data between Senseforce and your local CSV. You can hover over the information symbols to get an easy-to-understand description of each setting. To finish the configuration, adjust the settings as follows and click on "Set up connection".

Airbyte Senseforce to Local CSV connection configuration

- Connection Name: Name of the connection in your Airbyte instance. Can be any name.

- Replication frequency: Airbyte provides a powerful scheduler. If you want, you can set this to e.g. 30 minutes to schedule a data sync every 30 minutes. We are only interested in a one-time sync, therefore we set it to "Manual".

- Namespace: This defines the name of our resulting file. It will be called "export" in our example.

- Sync mode: This defines how Airbyte syncs data from source to destination. The options are "Incremental" and "Full Refresh". A "Full Refresh" always syncs all data. For Incremental syncs, Airbyte stores a state variable, which in the case of the Senseforce connector is the Timestamp of your Dataset. The next time you sync this source, Airbyte looks up this state variable and continues syncing from the last successfully synced timestamp.
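The incremental logic described above can be sketched in a few lines of Python. This is a simplified illustration under assumptions, not Airbyte's implementation: records are plain dictionaries, `timestamp` is a hypothetical cursor field name, and the state is simply the newest timestamp emitted so far.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Iterable, List, Optional, Tuple

Record = Dict[str, Any]


def incremental_sync(
    records: Iterable[Record],
    state: Optional[datetime],
    cursor_field: str = "timestamp",  # hypothetical cursor field name
) -> Tuple[List[Record], Optional[datetime]]:
    """Return the records newer than `state` and the updated state."""
    new_records = [r for r in records if state is None or r[cursor_field] > state]
    if new_records:
        # The new state is the newest cursor value we have emitted.
        state = max(r[cursor_field] for r in new_records)
    return new_records, state


def ts(hour: int) -> datetime:
    """Helper for the demo: a UTC timestamp on 2023-01-01 at the given hour."""
    return datetime(2023, 1, 1, hour, tzinfo=timezone.utc)


if __name__ == "__main__":
    source = [{"id": 1, "timestamp": ts(8)}, {"id": 2, "timestamp": ts(9)}]
    first, state = incremental_sync(source, None)     # no state yet: full read
    source.append({"id": 3, "timestamp": ts(10)})
    second, state = incremental_sync(source, state)   # only the new record
    print([r["id"] for r in first], [r["id"] for r in second])  # [1, 2] [3]
```

The strict `>` comparison skips the record that produced the stored state; real connectors may instead re-read that boundary, trading possible duplicates for not missing records that share the same timestamp.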

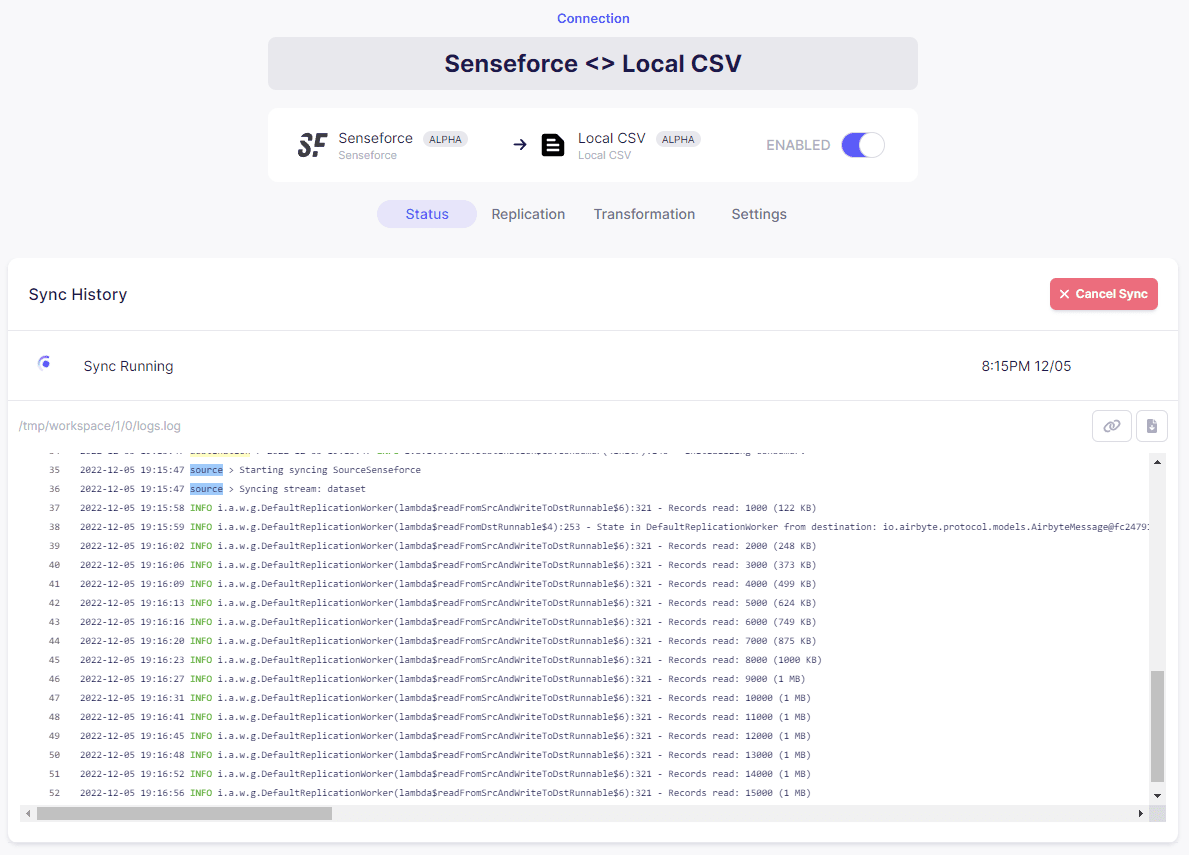
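The two lookup rules from the source configuration (the Dataset ID is the last part of the dataset URL, and the backend URL is the domain of the login screen) can also be written down as small Python helpers. The example URLs below are hypothetical; check the shape of your own Senseforce URLs before relying on these rules.

```python
from urllib.parse import urlsplit


def dataset_id_from_url(dataset_url: str) -> str:
    """The Dataset ID is the last part of the dataset URL."""
    return dataset_url.rstrip("/").rsplit("/", 1)[-1]


def backend_url_from_login_url(login_url: str) -> str:
    """The backend URL is the scheme plus domain of the login screen."""
    parts = urlsplit(login_url)
    return f"{parts.scheme}://{parts.netloc}"


if __name__ == "__main__":
    # Hypothetical URLs; replace them with the ones from your browser.
    print(dataset_id_from_url("https://mycompany.example.com/dataset/abc123"))  # abc123
    print(backend_url_from_login_url("https://mycompany.example.com/login"))
    # https://mycompany.example.com
```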

Starting the Sync

You are now ready for your first data extraction. In the connection screen where you ended up after configuring your connector, click "Sync now" to start your first source synchronization. You can click on the "Sync Running" button to open the logs and see what's happening. In the example below, we see that the connector is currently working and reading thousands of records.

Airbyte sync logs


Wait until the sync is finished. You can then find your exported CSV file in the /tmp/airbyte_local directory on your host machine, inside the folder you set as destination_path (e.g. /tmp/airbyte_local/export for /local/export).
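To start analysing the export locally, you can read the CSV with Python's standard library. This is a sketch under an assumption worth checking against your file's header: that the Local CSV destination writes one row per record, with the record itself serialized as JSON in an `_airbyte_data` column (the raw layout the Local CSV destination used, as far as we know, around the version shown above). The `host_path` helper mirrors the mapping of `/local` to `/tmp/airbyte_local` from the destination setup.

```python
import csv
import json
from pathlib import Path
from typing import Any, Dict, Iterable, Iterator

# Host folder that Airbyte mounts as /local (default for the Local CSV destination).
HOST_ROOT = Path("/tmp/airbyte_local")


def host_path(destination_path: str) -> Path:
    """Map a destination_path such as '/local/export' to the folder on your host."""
    return HOST_ROOT / Path(destination_path).relative_to("/local")


def read_airbyte_records(lines: Iterable[str]) -> Iterator[Dict[str, Any]]:
    """Yield the synced records from the lines of a Local CSV export.

    Assumes each row stores the record as a JSON string in `_airbyte_data`.
    """
    for row in csv.DictReader(lines):
        yield json.loads(row["_airbyte_data"])


if __name__ == "__main__":
    # Print every record of every CSV file in the export folder.
    for csv_file in sorted(host_path("/local/export").glob("*.csv")):
        with csv_file.open(newline="", encoding="utf-8") as f:
            for record in read_airbyte_records(f):
                print(record)
```

From here, the records can be handed to any analysis tool you prefer.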

Advantages of Airbyte for Senseforce data syncs

OK, we did all this connector configuration work, but what did we gain compared to using the Senseforce API directly? Actually, quite a lot:

- We can use the configured connector to sync Senseforce data to any other supported Destination, such as PostgreSQL, Snowflake or even MQTT.

- The Senseforce API is quite powerful, but also quite complex. It allows filtering and pagination. Airbyte handles all of that for us.

- It paginates requests so that it never fetches more data than the Senseforce API limits allow.

- It implements backoff and retries to handle API rate limits.

- And most importantly: Airbyte uses Stream Slices to fetch small chunks of data. So if you want to fetch, let's say, 5 years of data, Airbyte filters the Dataset so that each request only includes one day's worth of data. This prevents timeouts and API overloads.

- We can schedule this connection to automatically fetch the latest data. Combined with Incremental Syncs, this lets us extract the most important information from Senseforce in a cost- and time-efficient manner.
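The slicing and retry behaviour from the list above can be sketched as follows. This is not Airbyte's code: `fetch_day` is a hypothetical function that queries one day of the dataset, and the retry policy (exponential backoff on `ConnectionError` or `TimeoutError`, starting at one second) is an assumed example.

```python
import time
from datetime import date, timedelta
from functools import partial
from typing import Any, Callable, Dict, Iterator, List, Tuple, Type, TypeVar

T = TypeVar("T")
Record = Dict[str, Any]


def daily_slices(start: date, end: date) -> Iterator[Tuple[date, date]]:
    """Yield half-open [day, next day) windows covering start..end inclusive."""
    day = start
    while day <= end:
        yield day, day + timedelta(days=1)
        day += timedelta(days=1)


def with_backoff(
    call: Callable[[], T],
    retries: int = 5,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call`, retrying with exponential backoff (1s, 2s, 4s, ...)."""
    for attempt in range(retries):
        try:
            return call()
        except retry_on:
            if attempt == retries - 1:
                raise  # out of retries: surface the last error
            sleep(base_delay * 2 ** attempt)
    raise ValueError("retries must be at least 1")


def sync_range(
    fetch_day: Callable[[date, date], List[Record]],  # hypothetical API call
    start: date,
    end: date,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Record]:
    """Fetch one day per request instead of the whole range at once."""
    records: List[Record] = []
    for day_start, day_end in daily_slices(start, end):
        records.extend(with_backoff(partial(fetch_day, day_start, day_end), sleep=sleep))
    return records
```

Because each request covers only one day, a failure or rate limit only costs a retry of that single slice instead of the whole multi-year range.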

Summary

We have seen that Airbyte makes it easy to extract data even from very powerful APIs like the Senseforce API. It helps with features like Incremental Syncs, retries, backoff strategies, pagination and Stream Slices.

We also saw the easy-to-use Senseforce query builder and how conveniently we can create Datasets, which we subsequently use in downstream systems. With a simple CSV export, we can do some data analytics on our local machine.

Senseforce, with its low-code tool targeted at the machine industry, seems perfect for collecting, managing and preparing data with little to no IT or data science know-how required. In combination with Airbyte, companies can enable their data scientists to perform further analyses, machine learning and more.
