What is a modern data warehouse and why do you need one?

This post is going to highlight the reason why I highly suggest any company - no matter how big or small - to invest in some form of data warehouse. You are going to learn why that is and also what a data warehouse is. Furthermore, the post will highlight the main components of a data warehouse and potential implementations for these components.

In the final section, I'm going to showcase a real world example of before and after data warehouse data analytics performance.

Why do we need a data warehouse?

For almost two decades now we are intrigued by the sheer amount and promised possibilities of data. We invest in data teams, data tools and data projects - to find, that our expectations were way too big.

We tried to tackle this problem with various methods - and between 2010 and 2022 there was a huge focus on tools. We need more tools which focus on data and we need more data from these tools. Whole ecosystems emerged. And there were some successes. Mainly when starting to implement one or two tools. But these successes quickly vanished when introducing more tools, more analysts and more analytic requests.

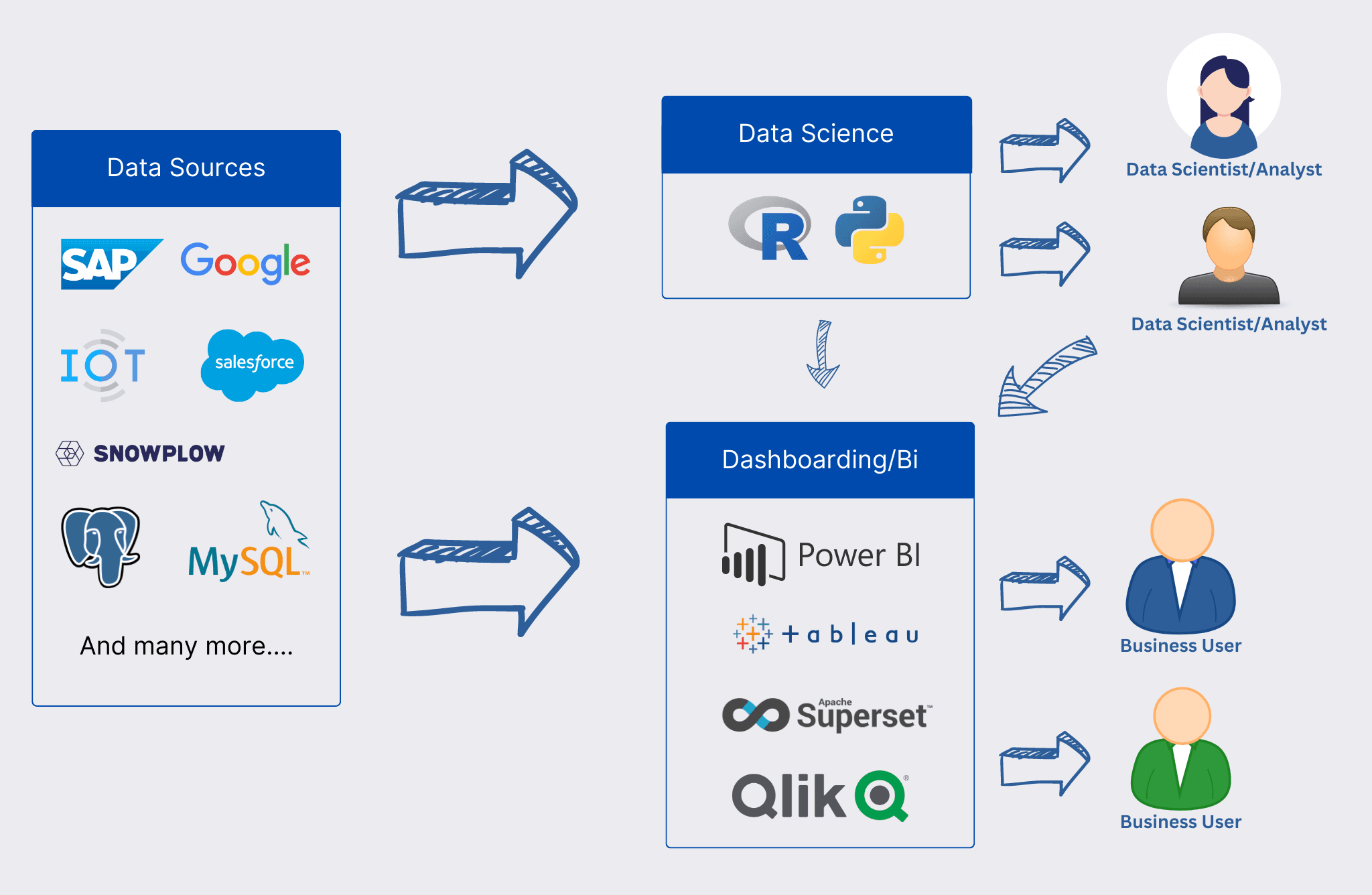

But why did this happen? The issue is best shown with the image below in mind.

Initial data setup of almost any company starting to work with data

Initial data setup of almost any company starting to work with data

First, we have a plethora of tools available - which all serve their purpose. We need an ERP system and the data of such a system. We need a CRM system. We need an IoT system, we need a web-tracking system, etc. etc. Having a lot of tools is not the issue in itself. The issue starts then later on.

Secondly, we also have quite a lot of analytics tools and possibilities. Our data scientists might like to use R and Python to create in-depth analyses of certain areas. Our analysts want will use one or even many of the huge amount of dashboarding, reporting and BI tools to create wonderful and beautiful dashboards. Also here, the problem is not the usage of such tools. Our scientists need Python, our analysts need a variety of dashboarding tools. The issue comes later.

And third, we have our business users which consume the reports, analyses and dashboards of our scientists and analysts.

So, where is the problem? The problem is in the connection of all of these elements. Our analysts directly connect their R, Python or BI Tools to our data sources. This introduces quite a lot of issues:

- With each new analyses, report or machine learning model, we need to work on data integration and data cleansing - some of the most annoying and time consuming tasks in data science and analytics.

- What if the API only eg. allows to consume data for the last 30 days? Or a year? Or two years? We are inherently limited to the amount of historical data we can use for our purposes.

- APIs are changed all the time. Some are better managed, some worse. But it's a fact, that someday the data of your API will change, breaking your integration. You might recognize this only some days later - and will unavoidably experience loss of data.

- There is no incremental value generation when integrating our data into reports and models. We integrate the data, clean them, create an ad-hoc data model. Next time we have to repeat these steps - never being able to build on what we already did in the past.

- Our analysts and scientists need to fight with an ever-growing amount of different APIs and API flavour - they are basically API experts rather than data experts.

- Have you experienced the situation, that data teams are quite fast at the beginning, but then tend to get slower over time? Well, this is due to the fact that they increase the amount of integrations and reports - but the whole system is not scalable.

Subsequently we generate additional issues which are directly obvious to our business users:

- If Susan and Andy are individually asked to create a report, both of them will almost always end up integrating the required data source, clean the data and create the report as they think is best to fulfill the request. However, in almost all cases both will end up with a different number for the same question. Why? Because there is a huge amount of flexibility in interpreting business questions. In our system, there are no guard rails against these interpretations. This will erode trust of the business users - because they can't (and should not) relate to the fact that both answers might be correct - they expect and need one answer. If they get many, they will inevitably end up doubting the results of our reports.

- The Dashboards will get slow. Really slow. Why? Because for most APIs we can only consume raw data or slowly aggregated report data. Each time our users open a dashboard, we will hit the API, request a bunch of raw data, do some transformation, some analytics and then only display our dashboard or chart. There is no way, this can scale.

- Change Request are very time consuming. Let's say we perfectly integrated the data sources for our analyses and even managed to make the dashboard quite fast. Our CEO likes the report, but wants one additional thing (because they always do, right? :-) ). We often need to start from scratch due to the un-scalable nature of our data integration and ad-hoc analyses.

These are by the way the main reasons where the business looses all the enthusiasm for data. When data can't be trusted, when dashboards and reports are way too slow and when requests for change cost thousands of dollars.

To summarize this chapter:

- We have a lot of data sources - which is unavoidable and actually a good thing.

- Each time we attempt to create a new analyses, report or dashboard, we need to integrate and clean the relevant API data

- This system can't scale and will result in huge frustration on the business user side as well as the analysts/scientists side due to:

- Annoying and repetitive "data plumbing"

- Missing trust in data

- Slow dashboards

- Long cycle times for new analytics and requests for change

- All the tools we use created data silos which we need to access individually

What is a data warehouse?

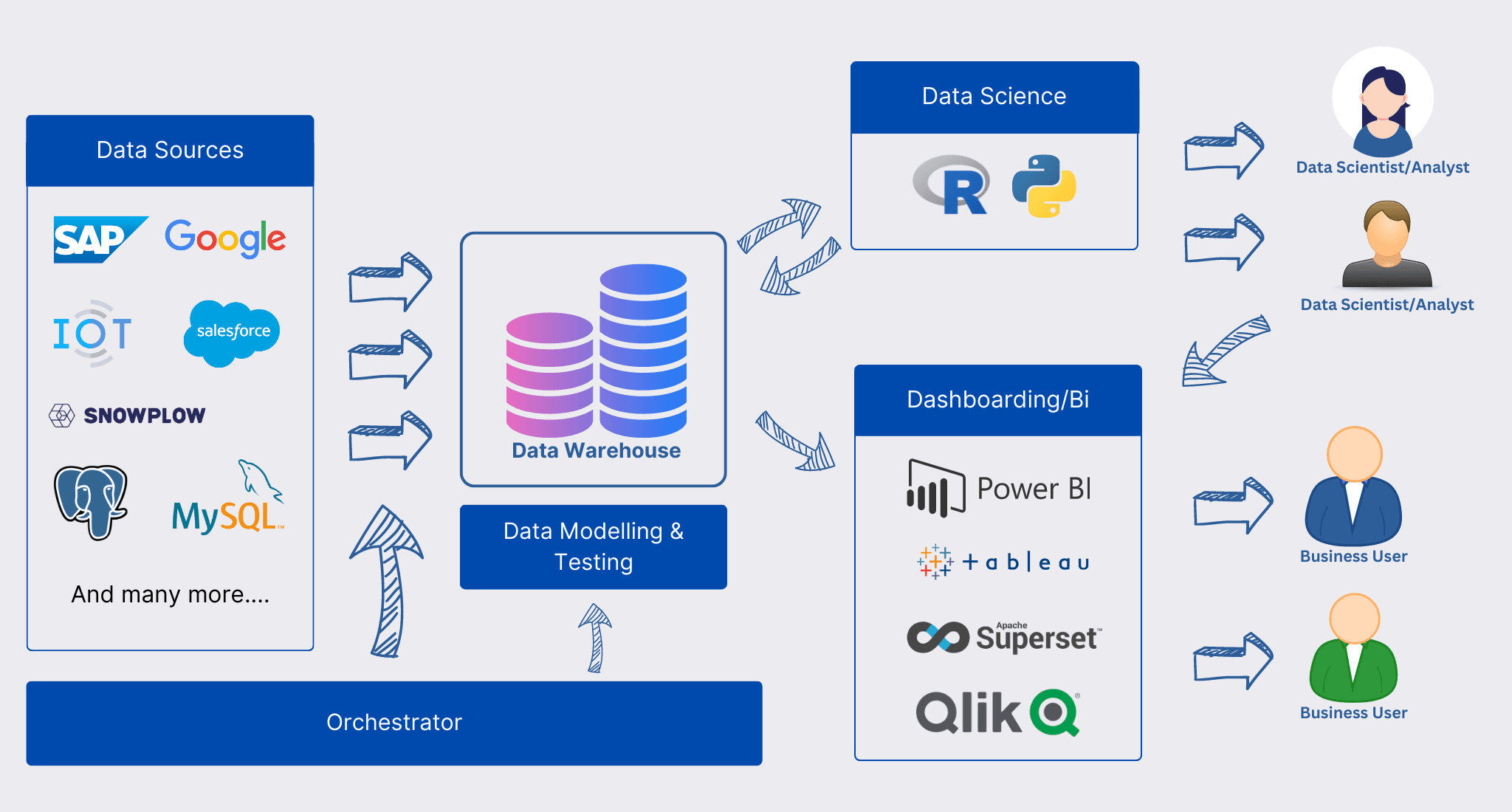

One architecture which actually solves almost all of the above mentioned issues is a data warehouse. A data warehouse is - in it's most basic form - nothing more than a central store for all of your data. A centralized database if you like. To see how this helps, let's have a look at the following illustration.

Data integration pipeline with data warehouse

Data integration pipeline with data warehouse

Compared to our first architectural sketch, we introduced three new elements:

- The core data warehouse itself

- Data Modelling & Testing block

- An orchestrator

Other than that, we still have a very similar situation. Lot of data sources, lot of tools and people which want to use this data sources.

But these three new elements introduce drastic changes in how our analysts, scientists and users can utilize the data during daily business. Let me introduce each of the blocks in more details.

Core data warehouse

The core data warehouse is the main centralized storage for all your data. To be more specific, the core warehouse is nothing more than a data storage - either a database like Snowflake or Google BigQuery or an object store like AWS S3 or Google GCS.

Any data which is related to your business will reside in this central store. This is one of the main paradigm shifts your teams may encounter: It shall be highly discouraged to use ANY data which is not part of the data warehouse. If we need to analyze new data sources - first integrate them in the data warehouse, then analyze them. We'll see later why.

The main advantage of having all data in one place is quite obvious: Instead of needing to use potentially tens or hundreds of APIs of all our data sources, our analysts and scientists suddenly only need to use one single API - and have access to all available data. This drastically reduces the amount of time and money needed for new reports, dashboards and models.

Most modern data warehouses even use SQL as their primary interface language - a language which data analysts and scientists know very well and love.

One important aspect of this core data warehouse is it's ability, to store the integrated API data in their rawest format. For most APIs this is a JSON string, but might as well be XML. Why is this important? We want to make sure that our integration process (see next chapter) is able to save the API data in the database - no matter whether the API changes the response, we want to be able to take these data and store them in our data store. This has two advantageous:

- We avoid data loss in case the API data changes. If we simply store our API data in a raw blob-like storage area, we can be sure, that we can save the data.

- We have all historical data for later reprocessing. Let's say we find a mistake in our subsequent data models. If we have all raw data available, we can simply run a recalculation. If we don't have the raw data, we might never have the chance to correct the mistake.

Additional requirements for this data store:

- It needs to cater for huge amounts of data. Do not underestimate the amount of data you will collect. Even if you are a small business. 1 TB is quickly amassed.

- Ideally we have rich analytical SQL capabilities

- The warehouse should be a great fit for analytical queries. Insert performance or minimal query latency is not that important. A huge analytical feature set and general good analytical query performance are things to look for.

- The technology needs to scale well for both price and query performance.

- You want a quite cheap system when having little data.

- When analyzing more data (> 1TB), you want to have the possibility to use hundreds or even thousands of CPUs - without you needing to manage the underlying infrastructure.

- Furthermore, you want to have mechanism to control and limit the amount of money spent on heavy BigQuery

- The data warehouse needs to provide data access possibilities to define and limit who can see which data. GDPR etc.

Terminology: Data experts often discuss, whether a data warehouse, a data lake or a lakehouse are the appropriate form for this central data storage. To be honest - for me this discussion is rather academic than practical. In theory, a data warehouse is more like a traditional database - for very structured data. A data lake is blob-store like storage where you can simply drop any data - from JSON to videos. A lakehouse is the combination of both of them. Why am I not interested in this discussion? Because all 3 of these theoretically different concepts actually only differ in minor details. What we need is a centralized data store, which can integrate any data we are interested in, in it's raw format. And all 3 of the definitions fulfill this requirement. And funnily enough, you can easily extend modern data warehouse for blob storage capabilities and data lakes for database capabilities and lakehouses in each of the two directions. So really nothing to worry about too much.

Examples for good data warehouses:

-

- Very cheap for little data

- Very performant and hands off - scales to thousands of CPUs without you noticing anything

- Quite expensive if you don't be careful

- Good analytical SQL capabilities

- Allows for limiting the amount spent to prevent huge bills

- Google Cloud only

-

- Allows for fine grained cost control - as you decide on the amount of compute you want to consume

- Easy to scale - but it needs manual interaction to scale

- Costs are not as transparent as for BigQuery

- Best-in-class SQL analytics capabilities

- Superb performance

- Runs on AWS, Azure and Google Cloud Platform

-

- Open Source - therefore runs where ever you like

- Good query performance

- Scales quite well for big datasets

-

- Use this option, if you desperately need to use Amazon services

- Not a bad warehouse, but in almost all regards worse then BigQuery and Snowflake

- AWS only

-

- Enterprise-grade data warehouse

- All-in-one platform for data warehousing, ai, spark etc.

- For huge companies, the platform ecosystem is great. For small and medium companies, having and managing the whole databricks platform is overwhelming and often not necessary.

-

GCS/S3

- Blob Storage on Amazon or Google Cloud Platform for storing unstructured data

- BigQuery and Snowflake can query data from the blog storage

Note: The more experienced data experts might note, that data warehouses were actually already introduced in the 1980s. While the central idea still stays the same - having all data available in a central storage - the modern approach is quite different. First, we set on high scalability of - mostly - cloud vendors. In the 80s, there were data marts - "smaller" parts of a data warehouse which form one big warehouse. In modern warehouses we have ONE big warehouse. No marts. Also the process of ELT (see next chapter) is rather new. But still - while there are quite some modernizations on data warehouses it is remarkable that already the 1980s had similar issues we have in our apparently golden time for data.

Integrating data using Extract, Load, Transform (ELT)

After having the centralized store ready, we need a way to integrate our data. The huge advantage compared to the beginner-architecture in chapter one is, that we only need to do the integration work once - not every single time we create a report. Furthermore, having a data store as our integration target, we can implement more elaborate integration patterns - like hourly integrating data from the last hour, etc. Instead of always loading what we need at the moment.

In previous times, ETL processes were used for integration purposes. Extract, Transform, Load. This would mean we load the data from the API, then transform them and then store them in our database. Due to the highly scalable and quite inexpensive nature of modern data warehouses, this process changed to ELT - Extract, Load, Transform. Meaning, we load the data from our APIs and store them in our database in their raw format. Only after that we are going to transform them into our data models.

Many advantages arise:

- Having the raw data in our data store allows for later re-calculating some data models

- We have a much higher chance to actually insert the data into our store (as we don't care about changes in the API response structure)

- ELT is generally faster, as we "only" need to load the data and store them in the database

- Also the transformation step (which is described in the next step) is generally faster, as it scales very well - especially in cloud infrastructures

- Maintenance is generally speaking lower, as the complexity of ELT is lower then ETL.

For an in-depth, but still short comparison of ETL vs ELT, see this post from Snowflake.

Summarizing, the ELT tool is the one thing which accesses our data and stores them in their raw format in our core warehouse.

Therefore, this tool needs the following qualities:

- It should provide out of the box connectors for the APIs we need to access - or at least provide easy ways to creating connectors. We don't want to create data integrations for our APIs from scratch.

- The tool allows to filter our APIs based on eg. timestamp or categories

- The ELT process remembers, up to which time or index the last sync was executed - and may continue from this point onward. Eg. if the last sync was done for data up until 12:00 am today, the next sync should only fetch data starting at 12:00 am.

- We need retries and logging

- Stream slicing is nice to have as well: Stream slicing divides the API requests into smaller pieces of data - let's say you want to sync data from last week - the API might get overloaded if you fetch the whole week. Good ELT tools are able to divide the requests in eg. 7 requests - one per day of the week

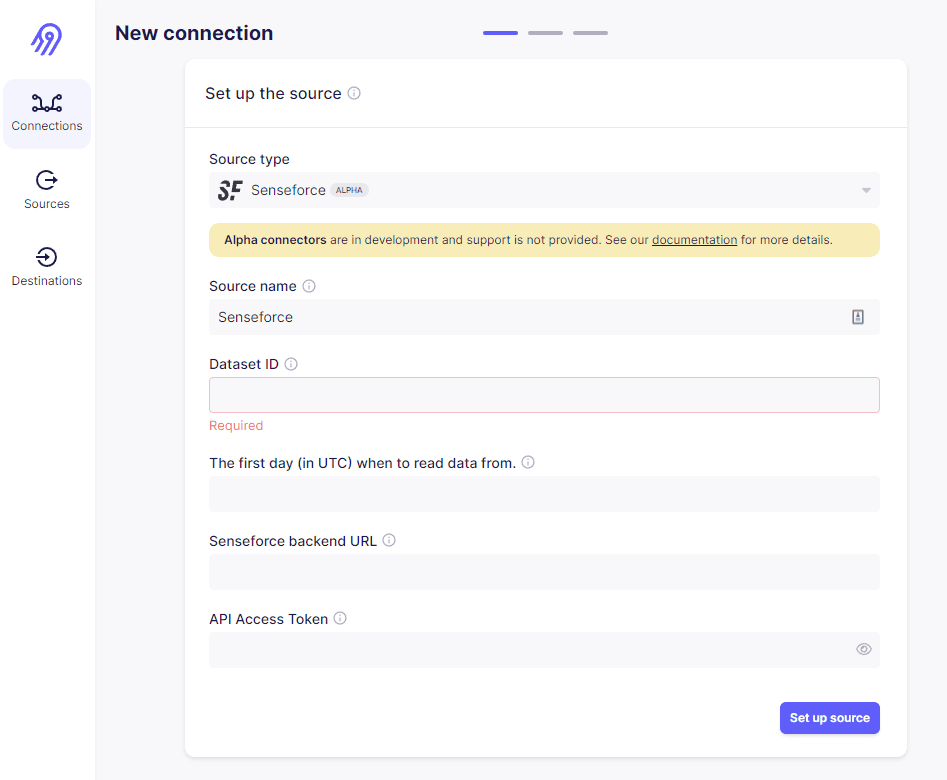

One of the most exciting tools currently available is Airbyte. It's an open source ELT tool with the most ready-made connectors on the market (as of time of this writing). They invested heavily in a great community and the by far best connector SDK out there. The huge and lovely community used the connector SDK and simply built connectors for any tool which is not too unknown. If you still have an API which is not covered by the vast connector catalogue, you can use their low-code connector SDK and create an integration yourself - I promise it's way easier than to create an integration from scratch.

Using the connectors in Airbyte is as easy as it gets. They provide a clean user interface which allows you to enter credentials and relevant settings - like sync frequency or how you want to slice the requests.

Airbyte connector config screen

Airbyte connector config screen

While Airbyte is most probably one of the best tools out there at the moment, using one of these tools is also not a mistake:

- Fivetran

- Stitch

- Hevo Data

I would heavily suggest to not use legacy enterprise ETL tools - especially the ones which call themselves low-code. Low-code enterprise tools before 2015 were just a different kind of low code...

Transforming and Modelling your data

After we have integrated our data in their raw format, we need to actually use them. Remember, up until now they are just blobs of eg. JSON or XML. Modelling our data "simply" means extracting the relevant data and fields from our blobs and storing them in real data warehouse tables or views - meaning that our analysts and scientists or BI tools can query them using SQL (basically any other query language works as well - but most of the modern data warehouses use SQL).

We can either use the means our data warehouse provides to model the date - or better - we use a tool like dbt. That being said - not using dbt is a huge mistake as it is very lightweight, open source and provides many features which make data modelling a breeze:

- All your data models are version controlled

- Jinja templating allows to create complex, but easy to maintain models

- Fine-grained control over the nature of the model: table, view, materialized view, etc.

- Define how to create the model from your source - either re-calculating the model each time for the whole relevant time frame or incrementally extend the model

- It allows to easily create packages which you can reuse across your potentially many data models

The data modelling step is the step which helps us to mitigate long-loading dashboards. Databases in the 2010s promised, that they are able to provide realtime reports on huge amounts of raw data. Unfortunately, the amount of data grew faster than the databases were able to scale. Meaning, we need to aggregate and filter the data we present to the end user. Using dbt-based data models allows you prepare the data in a way that the reporting or dashboarding tool only needs to display ready-made data - not doing any heavy workloads.

Some tips for data modelling:

- Use many models. Storage is very cheap nowadays. It's not critical to have only a little amount of models - however having many very dedicated models helps to actually maintain them. Because all your models have one purpose - compared to multiple purposes

- Clearly document your data models. As you will end up having many models, make sure anybody involved knows exactly what each model is about. dbt provides very good documentation capabilities - allowing to document each data model down to the smallest data point

- Use dbt grants to define who can access which of your data models

- Don't be too worried about resources. Cloud warehouses use highly optimized query planners - thinking about the best possible way of how write a query is often less productive then submitting a suboptimal query and letting the query planner do it's thing

- Make sure develop guidelines with your team for which data models shall be used on the "final" model layer - meaning which data models are exposed for dashboards and reports. Combined with good documentation, this process reduces the risk of reporting two different numbers for more or less the same question.

Tests

Now that we have our data integrated and transformed in usable chunks of tables and views - we are ready to go and use them. Not so fast - actually there is one critical step before. I really encourage you to create tests on the data you use in production. Using dbt-expectations - an extension for dbt, we can easily define how we expect our data to be.

- Can the the data be null?

- Should the data be a date string?

- Should they be an E-Mail

- Do we expect them to be between 10 and 25?

- Do we expect them to have at least one value within the last hour?

- What data type do we expect our data point to be?

While this step might sound like a burden at first it really is a huge time and life saver later on. Integrating data and maintaining a high level of data quality is hard. Having data tests makes this drastically easy:

- You'll notice when an API changes quite early

- You'll notice when data are wrong. Suddenly have 97% clickthrough-rate? Don't get embarrassed by reporting this obviously wrong number to your management team ever again.

- You'll notice when someone screwed up. Mistakes are unavoidable - and not a problem if detected early on.

- This all in all increases the trust in your data. Just telling your users that you have tests will improve trust. Trust me ;-)

As dbt provides a great extension for these kinds of test, you can simply add tests to the same files which describe your data models. So not having tests is really not an option by now.

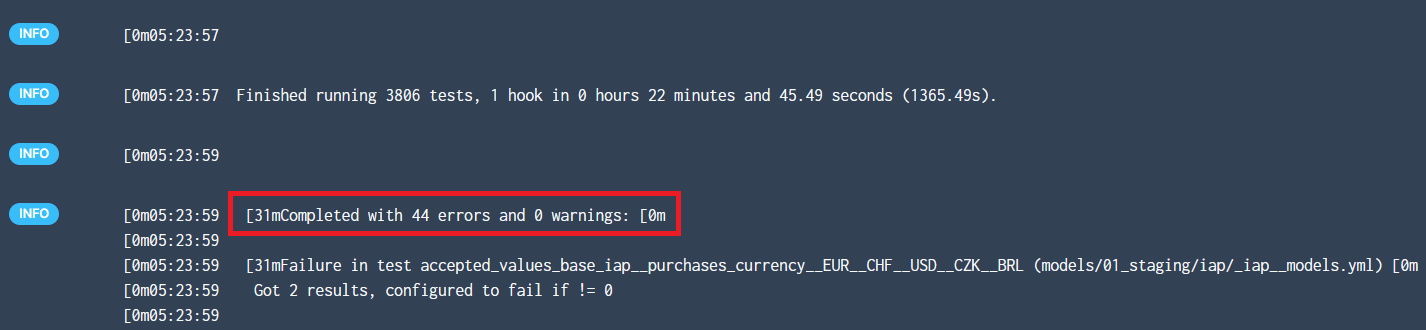

Disappointing but very informative dbt test run

Disappointing but very informative dbt test run

Orchestration

We are almost done - remember, we have our data storage, ways to integrate the data, ways to model data and ways to test that our data and morels are accurate. As you might see, these components need to somehow relate to each other with regards to execution time. I want to periodically integrate an API, then want to update a data model after successful integration, followed by a test run and maybe an additional data model update.

Having some cron jobs is simply not sufficient anymore - as you will end up having hundreds of jobs - some running in parallel and some having dependencies on previous ones.

For exactly this purpose data orchestration tools were invented.

Their main purpose is indeed nothing more than triggering other systems. Trigger our ELT to start an integration. Trigger dbt to create a model. Trigger dbt to execute a test. While they can also execute more complex tasks (eg. one of the best tools at the moment - prefect - is able to run any python script as orchestrated flow) - in a data warehouse scenario they are mainly used for triggering things.

While the main task indeed sounds very simple, there are actually some additional features we expect from a good data orchestration tool:

- Good logging and observability

- Easy to create workflows - best would be without learning a new programming language

- Highly flexible scheduler

- Notification system - for when a flow fails

- Good integration with our data warehouse tools

- Allows to create complex dependencies - eg. execute flow2 after flow1 succeeded, but run flow3 if flow1 fails

- Needs to respect IT security - meaning that we can't have (incoming) firewall ports opened if we want to integrate internal tools

There are several tools around, fulfilling most of the above requirements. The three most common are:

- Apache Airflow: The "classic" solution for workflow orchestration. Mature, but little rusty. Complex.

- Prefect: One of the best solutions at the moment. Ticks all the boxes. Great community. Open source.

- Dagster: End to end solution for orchestration with integrated development capabilities.

Real-world gains of using a data warehouse

While the above is all nice, what are actual real-world benefits of a data warehouse? The following achievements were implemented at a medium-sized company with a digital product and about 30 Mio. visitors per month.

- The loading time of one of the main dashboards of the product time was reduced by 200x (!). Instead of waiting several minutes, we reduced the waiting time to some seconds.

- After the management articulated trust-issues with the presented data, together with the data team we implemented strict data tests and guidelines for which metrics are to be reported to the management team. While we can't measure this success, the feedback was astonishing. The management team expressed their gratitude for feeling more heard and having better feelings when looking at the data.

- During the time of 21st December to 6th of January - so a rather quite period - the small data team was able to deliver 14 data reports needed for year-end-reporting. 14 reports in 2 weeks was something nobody could dream of before having the data warehouse. While this is nice in itself - it also highlights a business benefit: Analytics and reports suddenly got quite inexpensive!

- The team still struggles with bad APIs - however at least they recognize problems on their data providers side before they impact the actual reports

- Data are finally documented - allowing the data team to confidently explain why a certain number was as it is. Again increasing trust in the teams works

- The team finally has a foundation to consider working with advanced data science methods like ML and AI. Before having this tested, high-quality centralized data store, there is no way one can create high quality machine learning models

Summary

We have seen that when using data to solve business issue it is tempting (and good) to introduce more and more tools. However, having a good amount of tools also means having a good amount of APIs to fetch data from. A data warehouse allows to integrate all these APIs in a central data store. With modern data modelling tools like dbt, we can create high-quality, tested models which our analysts and data scientists can use. Instead of connecting to each API individually, they only need one interface - our data warehouse API. Most of the time this is SQL - a language data people like and know.

While setting up a data warehouse is nothing to be done in a day - the investment is worthwhile the effort. This is true for any company - there are guaranteed benefits for a data warehouse. No matter whether you are a big enterprise or a small startup. All of us have quite some data to analyze - and all of us can benefit from having them in a centralized store - easy to be accessed and with high quality. The quality aspect maybe being the most important of all.

Don't event start thinking about machine learning, if you don't have a data warehouse or similar centralized data structure.

Iot Icons erstellt von Freepik - Flaticon Data warehouse icons created by inipagistudio - Flaticon

------------------

Interested in how to train your very own Large Language Model?

We prepared a well-researched guide for how to use the latest advancements in Open Source technology to fine-tune your own LLM. This has many advantages like:

- Cost control

- Data privacy

- Excellent performance - adjusted specifically for your intended use