Uncovering the Power of Vector Databases with pgvector: An Introduction

Vector databases are currently all the hype. With the rise of large language AI models (LLMs), efficiently managing and searching large-scale, high-dimensional data has become a tremendously important use case. The solution to this challenge lies in vector databases – a powerful and increasingly popular data storage technology that enables faster and more accurate searches. In this blog post, we will demystify vector databases, discuss the reasons behind their growing popularity, and guide you through using pgvector – a versatile vector extension for PostgreSQL – to enhance your data management and search capabilities.

Table of contents

- What are vector databases?

- Use-Cases for vector databases

- Vector databases and Large Language Models

- pgvector - a PostgreSQL vector extension

- Bonus: pgvector on Timescale

- Summary

What are vector databases?

Vector databases have emerged as highly valuable in the world of data storage and search, proving to be especially beneficial for high-dimensional data. At their core, these databases store, manage, and index data in the form of vectors which are mathematical representations of abstract entities such as images, audio files, text, and other complex data types. For example, each word in a text can be represented as a single number - making it easy to store and even more so it's easy to work with by algorithms.

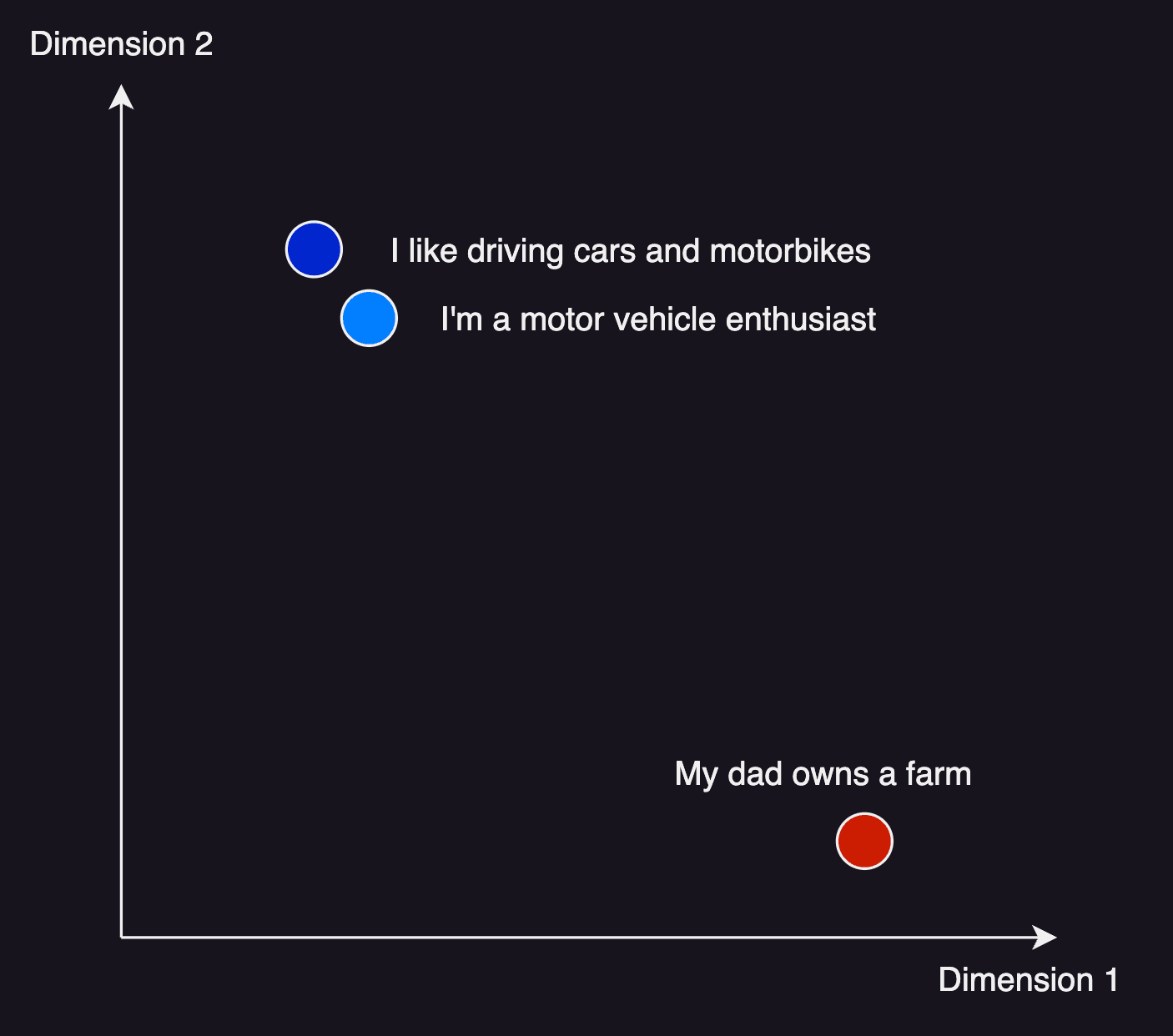

By exploiting the inherent properties of vectors, these databases facilitate efficient and precise searching and analysis of massive datasets. The main advantage of a vector database is it's capability to find similar entries. As an easy-to-understand example, if you have two sentences which both have similar meanings, they both will result in vectors with a short distance to each other. The vector database then helps you to find all the vectors which are close to each other.

A very easy example is shown below. While the two sentences at the top are quite similar in terms of their meaning, the one on the bottom is very different. Vector databases are able to encode such sentences into vectors and than find the ones which are close togehter - meaning they are similar.

vector similarity search

vector similarity search

Keep in mind that in real-world application we have way more than 2 dimensions - OpenAI embeddings currently use around 1500 dimensions for meaningful vectorization of language.

Now let's break down some of the key features of vector databases:

Vector representation: Converting complex data types into vectors allows higher-dimensional data to be represented as points in a multi-dimensional space. This representation is not only computationally efficient but also simplifies the process of comparing and relating data points.

Similarity-based search: Vector databases excel in searching for items that are similar to a given query, based on their vector representations. They use similarity metrics like Euclidean distance, cosine similarity, or Manhattan distance to determine the closeness between data points in the multi-dimensional space, which translates to finding the most relevant and similar results.

Scalability: Designed to handle large volumes of data, vector databases maintain high search accuracy and response times even as the dataset size grows. Additionally, they typically offer mechanisms for parallel processing and distributed computing, ensuring that the demands of ever-growing data can be met effectively.

Machine learning and AI compatibility: The adoption of vector databases has surged in recent years owing to the massive growth of artificial intelligence (AI) and machine learning (ML) applications. Converting complex data into vector representations enables seamless integration with these algorithms, leading to valuable insights and predictions at an unprecedented scale.

Use-Cases for vector databases

The versatility and efficiency of vector databases make them valuable for a wide range of applications across various industries. Some of the prominent ones are:

Natural language processing (NLP): Vector databases facilitate semantic search and text analysis through efficient handling of word embeddings or document vectors. They enable tasks like document classification, sentiment analysis, and keyword extraction, helping organizations make sense of massive volumes of textual data from sources like social media, forums, customer interactions - and currently most importantly - LLM prompt inputs.

Image search and recognition: Image databases benefit immensely from the vector representation and similarity-based search capabilities of vector databases. They can effectively handle tasks such as reverse image search, object detection, and facial recognition, due to the inherent ability to identify similarities and patterns across high-dimensional data.

Recommendation systems: One of the critical components of modern e-commerce and content platforms is a robust recommendation engine. By using vector databases, these systems can efficiently analyze user preferences and content features to generate personalized and highly relevant recommendations to users in real-time.

Anomaly detection and fraud prevention: Sector-specific data points, such as financial transactions or user behavior, can be converted to vector representations for real-time analysis. The similarity-based search capabilities of vector databases enable rapid identification of unusual patterns or potential fraud, aiding organizations in mitigating risks.

Genomic research: In the field of genomics, researchers deal with complex biological data and must often identify similar genetic sequences or structures. Vector databases, with their powerful search capabilities, can accelerate this process with greater precision, paving the way for critical discoveries and advancements.

Multimedia content management: Vector databases can handle diverse content, including audio and video, by transforming them into vector representations. This ability democratizes content search and analysis, enabling seamless retrieval of media based on similarity, pattern recognition, or automatic content tagging.

These use-cases are just the tip of the iceberg, as vector databases have far-reaching applications across a plethora of industries. As more organizations recognize the potential of vector databases, their adoption and exploration will continue to push the boundaries of data management and analysis.

Vector databases and Large Language Models

While we already covered use-cases, the currently most prominent use-case might be usage of vector databases as part of LLM AI chains. Pinecone created a highly valuable blog post about exactly this topic - explaining vector database usage for LLMs. Have a look at their great article for more details into this specific topic.

pgvector - a PostgreSQL vector extension

pgvector is an open-source vector database extension for PostgreSQL. It supports:

- exact and approximate nearest neighbor search

- L2 distance, inner product, and cosine distance

- any language with a Postgres client

Some of the highlights are:

Straightforward integration: pgvector is easy to set up within an existing PostgreSQL environment, as it can be added as an extension to an existing database. This feature enables both new and long-time users of PostgreSQL to reap the benefits of vector databases without the need for major system overhauls.

Support for various distance metrics: pgvector comes with built-in support for multiple distance metrics, including Euclidean, cosine, and Manhattan distances. This versatility allows for a highly customizable similarity-based search and analysis tailored to specific application requirements.

Indexing support: The pgvector extension offers efficient indexing options for vector data, such as k-nearest neighbors (k-NN) search. As a result, users can achieve rapid query execution while maintaining high search accuracy, even with considerable growth in dataset size.

Accessible query language: As a PostgreSQL extension, pgvector leverages familiar SQL query syntax for vector operations. This approach simplifies the process of adopting vector databases for users with SQL knowledge and experience, and prevents them from having to learn a new language or system.

Active development and support: pgvector is frequently updated to ensure compatibility with the latest PostgreSQL versions and features, while the developer community continually works on enhancing its functionality. Consequently, users can expect a well-supported solution for their vector data needs.

Robustness and security: By integrating with PostgreSQL, one of the most reliable and secure RDBMSs available, pgvector inherits the same level of robustness and security features, enabling users to store and manage their vector data with confidence.

How to use pgvector

-

Install pgvector on your database server

1cd /tmp2git clone --branch v0.4.2 https://github.com/pgvector/pgvector.git3cd pgvector4make5make install # may need sudo -

In your database, run this command to enable the extension

1CREATE EXTENSION IF NOT EXISTS vector; -

Create a table storing the vectors

1CREATE TABLE items (id bigserial PRIMARY KEY, name, features vector(3)); -

Adding data works as follows:

1INSERT INTO items (features) VALUES ('[1,2,3]'), ('[4,5,6]'); -

As pgvector builds on top of postgres, a lot of the PG DML is available. Eg. to upsert, you might run:

1INSERT INTO items (id, features) VALUES (1, '[1,2,3]'), (2, '[4,5,6]')2ON CONFLICT (id) DO UPDATE SET features = EXCLUDED.features;

pgvector query operators

In pgvector, various query operators are available to perform different operations on vector data. These operators typically focus on calculating the similarity or distance between vectors, with some operators using different distance metrics. Here, we will discuss the commonly used operators in pgvector:

<->: This operator calculates the Euclidean distance between two vectors. The Euclidean distance is the straight-line distance between the points represented by the vectors in the multi-dimensional space. Smaller Euclidean distances indicate greater similarity between vectors, making this operator useful in finding and ranking similar items.

1SELECT id, name, features, features <-> '[0.45, 0.4, 0.85]' as distance2FROM items3ORDER BY features <-> '[0.45, 0.4, 0.85]';

<=>: The<=>operator computes the cosine similarity between two vectors. Cosine similarity compares the orientation of two vectors rather than their magnitude. It ranges from -1 to 1, with 1 indicating that the vectors are identical, 0 implying no correlation, and -1 meaning that the vectors point in opposite directions.

1SELECT id, name, features, features <=> '[0.45, 0.4, 0.85]' as similarity2FROM items3ORDER BY features <=> '[0.45, 0.4, 0.85]' DESC;

<#>: The (negative) inner product between two vectors can be computed using the<#>operator. The inner product denotes the magnitude of the an orthogonal projection of one vector onto another one. The sign determines whethere the vectors point in the same or opposite directions. Orthogonal vectors have an inner product of 0. Please take notice that pgvector - due to Postgres technical details - returns the negative inner product!

1SELECT id, name, features, features <#> '[0.45, 0.4, 0.85]' as distance2FROM items3ORDER BY features <#> '[0.45, 0.4, 0.85]';

You can select an appropriate operator based on your application requirements or the nature of your data. Some factors to consider while choosing an operator include preserving relative distances, emphasizing magnitude or orientation, and prioritizing specific dimensions over others. Note that, depending on your data and use case, the choice of operator may have a significant impact on the quality of search results and ultimately the effectiveness of your application.

NOTE: Additionally to query parameters, pgvectors offers functions to calculate l1 & l2 distances, inner product and cosine similarity, as described on the pgvector github page.

pgvector indexing

By default, pgvector performs exact nearest neighbor search, which provides perfect mathematical matches. While this is the most accurate way, it might be quite resource intense and lead to slow query response time. pgvector allows to trade some of the accuracy with performance by adding an index to use approximate nearest neighbor search.

To still have a good accuracy but with greatly enhanced performance:

-

Create the index after the table has some data

-

Choose an appropriate number of lists - a good place to start is rows / 1000 for up to 1M rows and sqrt(rows) for over 1M rows

-

When querying, specify an appropriate number of probes (higher is better for recall, lower is better for speed) - a good place to start is lists / 10 for up to 1M rows and sqrt(lists) for over 1M rows.

1BEGIN;2SET LOCAL ivfflat.probes = 10;3SELECT ...4COMMIT; -

Add an index for each distance function you want to use.

-

L2 distance

1CREATE INDEX ON items USING ivfflat (embedding vector_l2_ops) WITH (lists = 100); -

Inner product

1CREATE INDEX ON items USING ivfflat (embedding vector_ip_ops) WITH (lists = 100); -

Cosine distance

1CREATE INDEX ON items USING ivfflat (embedding vector_cosine_ops) WITH (lists = 100);

-

Bonus: pgvector on Timescale

Timescale just announced that their managed cloud service now also supports pgvector.

TimescaleDB is an open-source, time-series database designed for managing large-scale time-series data with the power and convenience of SQL. Built as an extension of PostgreSQL, it combines the proven reliability and robustness of PostgreSQL with optimizations specifically tailored to handle time-series data.

It is widely used in the Time-Series space and offers a superb cloud database experience. Many users therefore will already have a Timescale instance - now having access to pgvector allows them to create LLM AI applications for their time series use-cases. All without having to set up and manage yet another database "just" for the vectors.

The following guide assumes that you already have a running Timescale service set up.

-

If not already done, install psql as described in the official Timescale Blog

-

Connect to your database by using the connection command provided in the Timescale console

1psql "postgres://tsdbadmin@secret.tsdb.cloud.timescale.com:36055/tsdb?sslmode=require"

Now you are connected to the Timescale postgres database. Run the following command to enable the pgvector extension:

1CREATE EXTENSION IF NOT EXISTS vector;

That's it - now you have a fully managed pgvector-based vector database at your hands, in the same database where also your time series data reside.

Summary

In this blog post, we explored the importance of vector databases for managing high-dimensional data and their applications across various industries. We introduced pgvector, a versatile PostgreSQL extension that inherently supports vector data storage and search, presenting an accessible vector database solution. Using a practical guide, we demonstrated the process of creating tables using pgvector, inserting data, and querying for similar items. Furthermore, we discussed different query operators available in pgvector for calculating similarity measures, such as Euclidean distance, cosine similarity, and Manhattan distance.

Finally, we demonstrated how to use pgvector in your fully managed Timescale cloud database.

------------------

Interested in how to train your very own Large Language Model?

We prepared a well-researched guide for how to use the latest advancements in Open Source technology to fine-tune your own LLM. This has many advantages like:

- Cost control

- Data privacy

- Excellent performance - adjusted specifically for your intended use